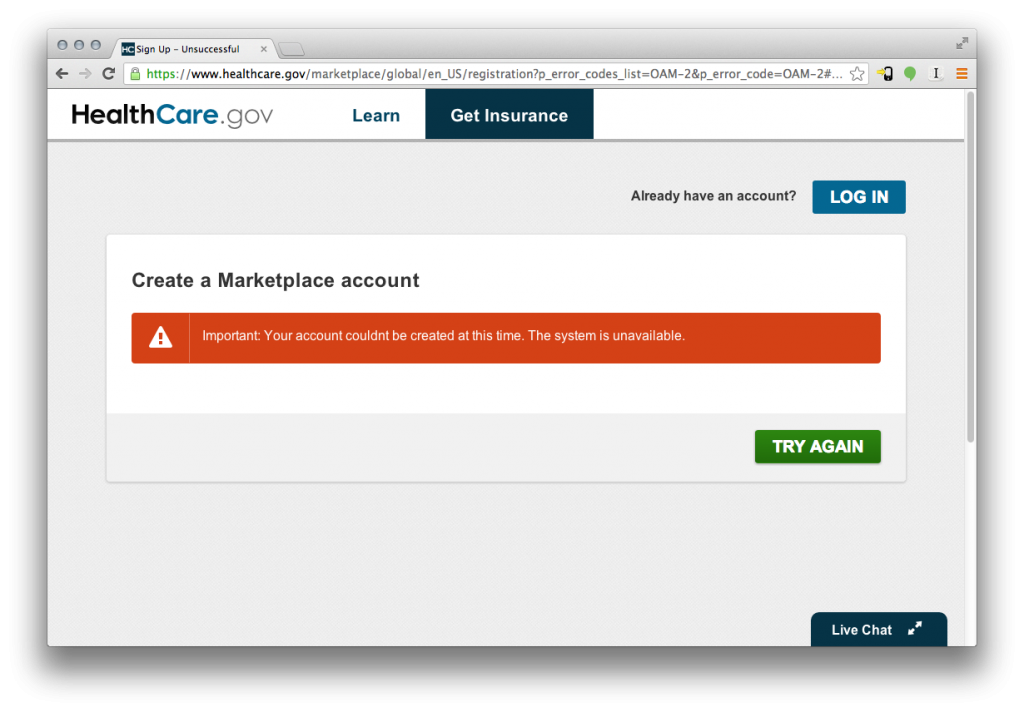

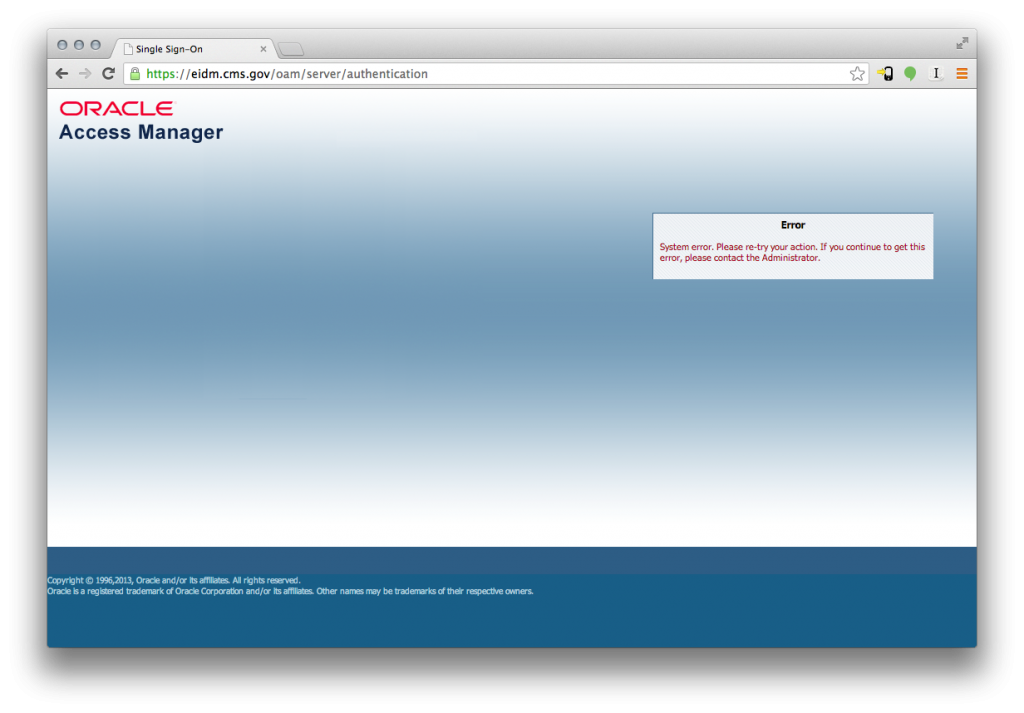

The last post looked at what may have accounted for the architectural instability of the healthcare.gov infrastructure and tried to learn from it. HHS recently provided a brief overview of the problems and its efforts to resolve them, but most questions will clearly remain unanswered unless a full postmortem is made available. One issue that has received more attention lately is the role of open source in building out the exchanges. It first drew notice with Alex Howard’s piece in the Atlantic from last June, which emphasized the open source approach Development Seed had taken to develop the frontend. Given this context, many thought of the whole project as open source and were dismayed to discover otherwise. More recently, the open source nature of the project has been questioned again because of the removal of the GitHub repository that housed the code originally created by Development Seed.

It turns out there was a lot of open source thinking from the earliest days of building the exchanges. In fact, I was part of a team asked to help ensure the infrastructure was developed following open source best practices.

Idealistic Youth

In 2010 I helped found a project called Civic Commons as a partnership between OpenPlans and Code for America. Civic Commons was meant to provide human capacity and technical resources to help governments collaborate on technology, particularly through the release and reuse of open source software. Civic Commons itself was a collaboration among several non-profits, but initially it was also a close partnership with government. The project was supported by the District of Columbia, under then-CTO Bryan Sivak, as one of the city projects in Code for America’s inaugural year. Unfortunately, DC’s involvement didn’t survive the District’s transition to a new administration, but Civic Commons continued with the Code for America fellows (Jeremy Canfield, Michelle Koeth, Michael Bernstein) and both organizations working together.

In early 2011 the Code for America fellows met with then-US CTO Aneesh Chopra, and he asked them for assistance on an exciting new project.

“I need your help,” he began, before sharing some recent news. He said it had just been announced earlier in the week that “Seven states and one multi-state consortia will receive in aggregate $250M to build out insurance exchanges – they are required to align to principles, feed into verification hub, and engage in health philanthropy community.” Then he explained how we fit in: “Here is the specific ask, because I am big on open collaboration, I made a requirement that each awardee would have to join an open collaborative, a civic commons, have to join a commons for the reusability and sharing of all IP assets.” Aneesh went on to explain that it didn’t seem appropriate for the federal government to play this kind of role among the states and that he was really looking for an independent entity to help out. He said he was “looking for a commons that can act as the convening and tech support arm to the seven awardees – before the states go off and hire [big government contractor] who will set things up to minimize sharing, we want someone to set the rules of the road.”

At the time, Civic Commons was just getting started, and even though the prospect of a large and important project like this was very attractive, it also seemed like it would consume all of our resources. After discussing concerns about our capacity and the uncertainty of available funding, we decided against being involved. Much of the early work with Civic Commons focused on more manageable projects among cities, but we did also include open source work at the federal level, like the IT Dashboard. I do have a slight sense of regret that we couldn’t be more involved with open sourcing the exchanges, but it was probably better that we learned what we did from smaller experiments.

Lessons Learned

Karl Fogel was the primary shepherd of the open source process with governments at Civic Commons, and one of his most notable blog posts detailed how difficult, even futile, it is to try to do open source as an afterthought. If you’re not doing open source from the beginning, then you’re probably not doing open source. Without the kind of organizational steward Aneesh was looking for, I fear those states may never have truly engaged in open source development as originally intended.

The other difficult lesson we learned from our experiments in getting cities and other governments excited about open source is that there tends to be much more motivation to release than to reuse. Some of this seemed to stem from a PR-driven sense that giving away your hard work looks good, but reusing others’ work looks lazy. Perhaps we need to do more to praise governments when they are smart enough not to reinvent the wheel or pay for it over and over again.

The most encouraging lesson I learned during our time with Civic Commons is that there are some effective models for open source collaboration that involve very little direct coordination. The main model I’m referring to is one based on common standards and modular components. At OpenPlans we saw this with the success of open source projects built on the GTFS transit data standard, like OpenTripPlanner, and on standardized protocols for real-time bus tracking, like OneBusAway. I’ve also watched this closely with the open source ecosystem that has developed around the Open311 standard, which includes both open source CRMs and separate client apps, such as Android and iOS mobile apps, that can be used interchangeably. The full stack of open source tools from the City of Bloomington and the work Code for America has done with cities like Chicago have been great models that demonstrate the opportunities for software reuse when governments have asynchronously agreed on shared requirements by implementing a common standard. The apps developed by Bloomington are now even being used by cities in other countries.
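To make the idea of asynchronous agreement a little more concrete, here is a minimal Python sketch, assuming only the standard GTFS `stops.txt` file and its `stop_id`, `stop_name`, `stop_lat`, and `stop_lon` columns. The two feeds are hypothetical sample data, not real agency feeds. The point is that a tool written once against the standard reads either feed without any coordination between the agencies, even when one of them orders its columns differently or adds optional ones.

```python
import csv
import io
from dataclasses import dataclass
from typing import List


@dataclass
class Stop:
    stop_id: str
    name: str
    lat: float
    lon: float


def read_stops(stops_txt: str) -> List[Stop]:
    """Parse the contents of a GTFS stops.txt file.

    Columns are looked up by their standard names, so column order and
    extra optional columns do not matter.
    """
    reader = csv.DictReader(io.StringIO(stops_txt))
    return [
        Stop(row["stop_id"], row["stop_name"], float(row["stop_lat"]), float(row["stop_lon"]))
        for row in reader
    ]


# Two hypothetical feeds from two different agencies.
CITY_A = """stop_id,stop_name,stop_lat,stop_lon
A1,Main St & 1st Ave,39.1653,-86.5264
"""

# Different column order plus an optional column; the same reader still works.
CITY_B = """stop_name,stop_id,stop_lon,stop_lat,wheelchair_boarding
Harbor Terminal,B7,-122.3321,47.6062,1
"""

for feed in (CITY_A, CITY_B):
    for stop in read_stops(feed):
        print(stop.stop_id, stop.name, stop.lat, stop.lon)
```

Neither agency had to talk to the other, or to the author of `read_stops`; implementing the shared standard was the only agreement required.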

The IT infrastructure for the exchanges was clearly based around common data standards, so you would hope the same opportunity would exist there.

An Open Exchange

The effort Aneesh described did continue without us, and only now have I started to learn about it in detail. The $250 million he mentioned was more precisely $241 million in federal funding through the Early Innovator grants. These grants were awarded to Kansas, Maryland, Oklahoma, Oregon, New York, Wisconsin, and the University of Massachusetts Medical School, representing the New England States Collaborative for Insurance Exchange Systems, a consortium of Connecticut, Maine, Massachusetts, Rhode Island, and Vermont. The grant was in fact as lofty as Aneesh had described to the fellows. The “Funding Opportunity Description” section of the grant states:

The Exchange IT system components (e.g., software, data models, etc.) developed by the awardees under this Cooperative Agreement will be made available to any State (including the District of Columbia) or eligible territory for incorporation into its Exchange. States that are not awarded a Cooperative Agreement through this FOA can also reap early benefits from this process by reusing valuable intellectual property (IP) and other assets capable of lowering Exchange implementation costs with those States awarded a Cooperative Agreement. Specifically, States can share approaches, system components, and other elements to achieve the goal of leveraging the models produced by Early Innovators.

The expected benefits of the Cooperative Agreements would include:

- Lower acquisition costs through volume purchasing agreements.

- Lower costs through partially shared or leveraged implementations. Organizations will be able to reuse the appropriate residuals and knowledge base from previous implementations.

- Improved implementation schedules, increased quality and reduced risks through reuse, peer collaboration and leveraging “lessons learned” across organizational boundaries.

- Lower support costs through shared services and reusable non-proprietary add-ons such as standards-based interfaces, management dashboards, and the like.

- Improved capacity for program evaluation using data generated by Exchange IT systems.

The grant wasn’t totally firm about open source, but it was still pretty clear. The section titled “Office of Consumer Information and Insurance Oversight: Intellectual Property” included the following:

The system design and software would be developed in a manner very similar to an open source model.

State grantees under this cooperative agreement shall not enter in to any contracts supporting the Exchange systems where Federal grant funds are used for the acquisition or purchase of software licenses and ownership of the licenses are not held or retained by either the State or Federal government.

It’s not totally clear what came of this. The last evidence I’ve seen of the work that came out of these grants is a PowerPoint deck from August 2012. The following month, the National Academy of Social Insurance published a report that provided some analysis of the effort. The part about code reuse (referred to as Tier 2) is not encouraging.

Tier 2: Sharing IT code, libraries, COTS software configurations, and packages of technical components that require the recipient to integrate and update them for their state specific needs.

Tier 2 reusability has been less common, although a number of states are discussing and exploring the reuse of code and other technical deliverables. One of the Tier 2 areas likely to be reused most involves states using similar COTS products for their efforts. COTS solutions, by their very nature, have the potential to be reused by multiple states. Software vendors will generally update and improve their products as they get implemented and as new or updated federal guidance becomes available. For instance, our interviews indicate that three of the states using the same COTS product for their portal have been meeting to discuss their development efforts with this product. Another option, given that both CMS and vendors are still developing MAGI business rules, is that states could potentially reuse these rules to reduce costs and time. CMS has estimated that costs and development could be reduced by up to 85 percent[32] when states reuse business rules when compared to custom development.

When you’re simply talking about using the same piece of commercial software among multiple parties, you’re far from realizing the opportunity of open source. That said, the work developed by these states was really meant to be the foundation for reuse by others, so perhaps that was just the beginning. We do in fact have good precedent for recent open source efforts in the healthcare space. Just take a look at CONNECT, OSEHRA, or Blue Button.

Maybe there has actually been real reuse among the state exchanges, but so far I haven’t found much evidence of that, or any sign of open source code in public as was originally intended. Then again, there’s still time for some states to open up their own exchanges, so maybe we’ll see that over time. Right now the attention isn’t really on the states anyway, but it turns out the Federally Facilitated Marketplace was supposed to be open source as well.

I’m not just talking about the open source work by Development Seed that recently disappeared from the Centers for Medicare & Medicaid Services’ GitHub page. I’m also talking about the so-called “Data Hub,” which has been a critical component of the infrastructure for both the federal exchange and the states. The contractor that developed the Data Hub explains it like this:

Simply put, the Data Services Hub will transfer data. It will facilitate the process of verifying applicant information data, which is used by health insurance marketplaces to determine eligibility for qualified health plans and insurance programs, as well as for Medicaid and CHIP. The Hub’s function will be to route queries and responses between a given marketplace and various data sources. The Data Services Hub itself will not determine consumer eligibility, nor will it determine which health plans are available in the marketplaces.
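That description amounts to a routing layer, and a toy Python sketch may help show the division of labor it implies. Everything in it is hypothetical: the class, the query type names (borrowed from the income and citizenship examples in the RFP quoted below), and the stub sources are illustrative assumptions, not the actual Hub’s design or interfaces. The hub only forwards a query to whichever source is registered for it and hands back the response; deciding eligibility stays with the marketplace.

```python
from typing import Callable, Dict

# Hypothetical interface: a data source answers one kind of verification query.
DataSource = Callable[[dict], dict]


class DataServicesHub:
    """Toy routing hub: forwards each query to the source registered for
    its type and returns that source's response unchanged. It makes no
    eligibility decision of its own."""

    def __init__(self) -> None:
        self._sources: Dict[str, DataSource] = {}

    def register(self, query_type: str, source: DataSource) -> None:
        self._sources[query_type] = source

    def route(self, query_type: str, payload: dict) -> dict:
        source = self._sources.get(query_type)
        if source is None:
            raise ValueError(f"no data source registered for {query_type!r}")
        return source(payload)


# Stub sources standing in for authoritative systems (hypothetical data).
def stub_income_source(payload: dict) -> dict:
    return {"applicant_id": payload["applicant_id"], "verified_income": 42000}


def stub_citizenship_source(payload: dict) -> dict:
    return {"applicant_id": payload["applicant_id"], "citizenship_verified": True}


hub = DataServicesHub()
hub.register("income", stub_income_source)
hub.register("citizenship", stub_citizenship_source)

# A marketplace gathers verified facts through the hub...
applicant = {"applicant_id": "A-001"}
facts = {q: hub.route(q, applicant) for q in ("income", "citizenship")}
# ...and then applies its own eligibility rules to them; that step is not the hub's job.
print(facts)
```

In this sketch, adding a new data source means registering one more callable; the marketplaces calling `route` do not change.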

So the Data Hub isn’t everything, but it’s clearly one of the most critical pieces of the system. As the integration layer that ties everything together, you might even call it the linchpin. What appears to be the original RFP for this critical piece of infrastructure makes it pretty clear that it was meant to be open source:

3.5.1 Other Assumptions

The Affordable Care Act requires the Federal government to provide technical support to States with Exchange grants. To the extent that tasks included in this scope of work could support State grantees in the development of Exchanges under these grants, the Contractor shall assume that data provided by the Federal government or developed in response to this scope of work and their deliverables and other assets associated with this scope of work will be shared in the open collaborative that is under way between States, CMS and other Federal agencies. This open collaborative is described in IT guidance 1.0. See http://www.cms.gov/Medicaid-Information-Technology-MIT/Downloads/exchangemedicaiditguidance.pdf. This collaboration occurs between State agencies, CMS and other Federal agencies to ensure effective and efficient data and information sharing between state health coverage programs and sources of authoritative data for such elements as income, citizenship, and immigration status, and to support the effective and efficient operation of Exchanges. Under this collaboration, CMS communicates and provides access to certain IT and business service capabilities or components developed and maintained at the Federal level as they become available, recognizing that they may be modified as new information and policy are developed. CMS expects that in this collaborative atmosphere, the solutions will emerge from the efforts of Contractors, business partners and government projects funded at both the State and federal levels. Because of demanding timelines for development, testing, deployment, and operation of IT systems and business services for the Exchanges and Medicaid agencies, CMS uses this collaboration to support and identify promising solutions early in their life cycle. Through this approach CMS is also trying to ensure that State development approaches are sufficiently flexible to integrate new IT and business services components as they become available.

The Contractor’s IT code, data and other information developed under this scope of work shall be open source, and made publicly available as directed and approved by the COTR.

The development of products and the provision of services provided under this scope of work as directed by the COTR are funded by the Federal government. State Exchanges must be self-funded following 2014. Products and services provided to a State by the Contractor under contract with a State will not be funded by the Federal government.

So far I haven’t been able to find any public code that looks like it would be the open source release of the Data Hub, but I remain optimistic.

Open source software is not a silver bullet, but it does a lot to encourage higher-quality code, and when coordinated properly it can do a great deal to maximize the value of tax dollars. Beyond the transparency of the code itself, it also brings transparency to the procurement process, because it makes it easier to audit claims about what a company’s software is capable of and what software a company has already produced. It also tends to select for software engineers whose culture and skills are genuinely oriented toward working with others to push the capability and impact of technology forward.

I hope we can stay committed to our obligations to maximize the tax dollars and ingenuity of the American people and stay true to the original vision of the exchanges as open source infrastructure for the 21st century.